Representation

Data representation refers to the format in which data (information) is stored, analysed, and processed by devices, such as computers, smartphones, wearables, and medical devices used in hospitals.

The challenge of representation in the context of AI and healthcare is twofold: computers can only process binary information (information stored as a series of 1s and 0s), and the data that must be converted into binary code may have been collected and stored in many different formats.

Data is transmitted within a computer by electrical signals that are either on or off (On = 1, Off = 0). When playing a digital video or writing a document, for example, that information must be converted into a binary format in order to be stored, processed and transmitted by a computer.

Data representation

- Each digit is known as a bit (binary digit)

- 4 bits make a nibble

- 8 bits make a byte

- In modern computing bytes are often counted in the millions (megabytes), billions (gigabytes) or more.

There are many ways of converting different information into binary digits and they tend to differ based on the type of data. For example:

- Numbers

The numbers 1, 2 and 3, as written in the most commonly used number system (denary, or decimal, which uses the digits 0 to 9), are represented as 1, 10 and 11 in binary code.
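As a minimal sketch of this conversion, Python's built-in `format` and `int` functions can translate between decimal and binary. The helper name `to_binary` is chosen here purely for illustration.

```python
def to_binary(n: int) -> str:
    """Return the binary digits of a non-negative whole number as text."""
    return format(n, "b")


# Convert the decimal numbers 1, 2 and 3 to binary.
for number in (1, 2, 3):
    print(number, "->", to_binary(number))

# int() with base 2 reverses the conversion.
print(int("11", 2))
```

Running this prints `1`, `10` and `11` for the three numbers, and `3` for the reverse conversion.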

- Text

Text data is represented in binary using the ASCII system (American Standard Code for Information Interchange), Extended ASCII, or Unicode.

ASCII is the most basic. It uses seven bits and has a range of 128 characters, including 33 control codes, the space character, 32 punctuation and symbol codes, 26 upper-case letter codes, 26 lower-case letter codes, and the digits 0-9. The capital letter A is represented as 1000001. The range of characters is quite small, meaning it is only really capable of coping with British and American English.

Extended ASCII uses eight bits and has a range of 256 characters. This makes it useful for languages such as Spanish, French and German that have accents such as à or ü.

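Unicode is a far more flexible character set. Its original 16-bit design allowed for around 65,000 characters; the current standard has room for more than 1.1 million code points, which are stored using encodings such as UTF-8 and UTF-16. It is most useful for representing languages such as Chinese and Arabic.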
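The differences between these character sets can be illustrated with a short sketch. It assumes Latin-1 as one common example of an 8-bit extension of ASCII and UTF-8 as the Unicode encoding; the helper name `char_to_bits` is hypothetical.

```python
def char_to_bits(ch: str, encoding: str) -> str:
    """Encode one character and return its bytes as space-separated 8-bit groups."""
    return " ".join(format(byte, "08b") for byte in ch.encode(encoding))


# 'A' is code 65 in ASCII, which is 1000001 when written in seven bits.
print(format(ord("A"), "07b"))

# 'ü' is not part of 7-bit ASCII, but fits in a single byte of Latin-1.
print(char_to_bits("ü", "latin-1"))

# In UTF-8, 'ü' takes two bytes and the Chinese character '中' takes three.
print(char_to_bits("ü", "utf-8"))
print(char_to_bits("中", "utf-8"))
```

The same character can therefore have different binary representations depending on the encoding used, which is why the encoding has to be known before text data can be read correctly.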

- Images and colours

The hexadecimal (or ‘hex’) system has 16 digits: the numbers 0-9 and the letters A, B, C, D, E and F. It is commonly used to represent colours. For example, the colour red is represented as #FF0000 in hex: two digits each for the red, green and blue components.

Imaging data consists of pixels. Each pixel is represented by a binary number; in a two-colour image, for example, black might be 0 and white might be 1. Colours require more bits for each pixel. The number of bits used per pixel is known as the ‘colour depth’, and each extra bit doubles the range of colours available for that pixel. The more colours an image requires, the more bits per pixel will be needed, and the bigger the resultant file will be.
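The relationship between hex colours, colour depth and file size can be sketched as follows. The function names are illustrative, and the size calculation assumes an uncompressed image with no file header, which real image formats rarely match exactly.

```python
def hex_to_rgb(colour: str) -> tuple:
    """Split a '#RRGGBB' string into red, green and blue values (0-255 each)."""
    digits = colour.lstrip("#")
    return (int(digits[0:2], 16), int(digits[2:4], 16), int(digits[4:6], 16))


def colours_available(bits_per_pixel: int) -> int:
    """Each extra bit doubles the number of distinct colours a pixel can take."""
    return 2 ** bits_per_pixel


def raw_image_bytes(width: int, height: int, bits_per_pixel: int) -> int:
    """Uncompressed size in bytes, ignoring any file header or compression."""
    return width * height * bits_per_pixel // 8


print(hex_to_rgb("#FF0000"))             # red: full red, no green, no blue
print(colours_available(1))              # two colours, e.g. black and white
print(colours_available(24))             # a common depth for colour images
print(raw_image_bytes(1024, 1024, 8))    # a 1024 x 1024 image at 8 bits per pixel
```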

The challenge in the context of AI and healthcare is that the data that needs to be represented in binary code might be collected and stored in different formats. For example, the way in which symptoms, diagnoses, and prescriptions are recorded in electronic health records (EHRs) differs depending on the clinical coding terminology used. When combining information from different hospitals, if one hospital records ‘diabetes’ as code 123456 and another records it as 234567, the binary representation of these two codes would be different.

This means a computer would not automatically recognise both records as ‘diabetes data’ for the purposes of training an AI algorithm. This problem is growing in scale as the number of different data sources available for healthcare analysis is expanding to include data recorded by wearable devices, at-home sensors, and more.

To be machine interpretable, all healthcare data, whether structured (such as EHR data), unstructured (such as free-text notes), imaging (such as X-rays), or other, needs to be represented as binary digits, i.e., as a series of 0s and 1s.

Clinical coding terminologies differ from one another: Read codes are not the same as SNOMED codes, for instance. This causes a problem when data needs to be represented in the same way for the purposes of aggregation, because two different codes have different binary representations even if they represent the same clinical concept.

To overcome this issue, different types of data often need to be grouped, transformed, and curated (e.g., by handling missing data) before they can be consistently represented in a computable format. This is an intensive process and there is currently no agreed methodology, although a very large number of techniques do exist, some of which involve the use of AI algorithms to help with the data sorting and transformation process.
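One simple form of this transformation is a lookup table that maps each source's local code to a shared concept. The sketch below is illustrative only: the source names, the `CODE_MAP` table and the `harmonise` function are hypothetical, and the codes 123456 and 234567 are the placeholder values used in the example above, not real clinical codes.

```python
# Hypothetical lookup: (source system, local code) -> shared concept label.
CODE_MAP = {
    ("hospital_a", "123456"): "diabetes",
    ("hospital_b", "234567"): "diabetes",
}


def harmonise(records):
    """Attach a shared concept to each record; unmapped or missing codes become None."""
    harmonised = []
    for record in records:
        key = (record.get("source"), record.get("code"))
        harmonised.append({**record, "concept": CODE_MAP.get(key)})
    return harmonised


records = [
    {"source": "hospital_a", "code": "123456"},
    {"source": "hospital_b", "code": "234567"},
    {"source": "hospital_b", "code": None},  # missing data still has to be handled
]

for row in harmonise(records):
    print(row)
```

After harmonisation, the first two records share the concept `diabetes` despite their different codes, while the record with a missing code is flagged with `None` so that it can be reviewed or excluded rather than silently dropped.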

It is argued that it may be more efficient, and less prone to error, to focus instead on making the data inputs (i.e., how data is recorded at the source) more standardised and homogeneous, rather than having to transform heterogeneous data post hoc.

An Owkin example

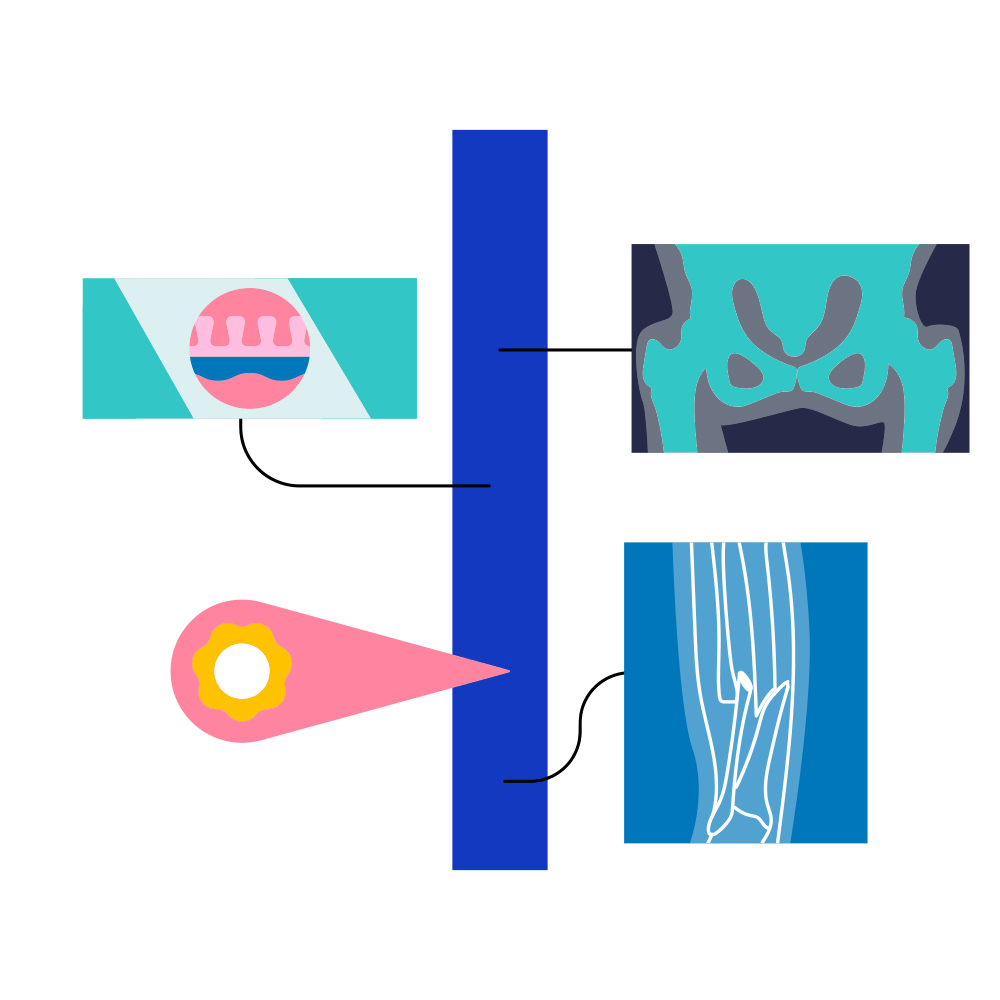

In July 2023, Owkin scientists used Self-Supervised Learning (SSL) to learn representations from histology patches, training machine learning models to understand which images have cancer-related features and which do not.

We explored the application of Masked Image Modeling (MIM) using iBOT, a self-supervised transformer-based network, in combination with SSL. Our model, Phikon, was pre-trained on more than 40 million histology images from 16 different cancer types. The model, code and features are publicly available on GitHub and Hugging Face.

Further reading